The Model Context Protocol (MCP) is emerging as a foundational standard for orchestrating and controlling data workflows in AI-native stacks. By replacing fragile one-off API integrations and custom glue code with a common protocol, MCP lets large language models (LLMs) and AI agents connect securely to data sources and tools, and automate entire extract-transform-load (ETL) processes in real time.

Why ETL Needs a New Model

Traditional ETL pipelines rely on static scripts or “ETL as code.” While effective, these workflows are often brittle, hard to govern, and slow to adapt when requirements change [1][3].

AI-native stacks demand something different:

- Dynamic orchestration, where AI agents can discover and invoke new tools at runtime.

- Fine-grained governance, where permissions are enforced centrally rather than embedded in fragile client code.

- Unified observability, with audit trails and telemetry across all integrations.

MCP provides this foundation by defining a protocol rather than a fixed taxonomy of functions [1][2].

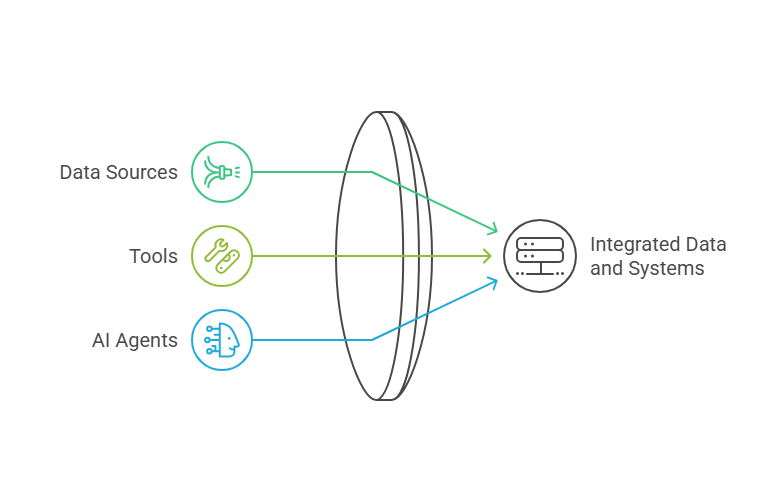

How MCP Reframes ETL as a Protocol

With MCP, each data system exposes its capabilities through an MCP server, while AI agents or clients consume them securely:

- Discovery at runtime: Agents can query servers for available resources and operations (e.g., SQL read-only queries, file access, or API calls) [2][3].

- Server-enforced permissions: A server can expose only the safe subset of operations, such as SELECT-only queries on production data [2][4].

- Composable workflows: Agents can chain server capabilities into orchestrated pipelines—extracting from a database, enriching via an API, and writing to cloud storage—all mediated by MCP [3][5].

This shifts ETL from custom glue into a standardized interaction model.
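The discovery and permission ideas above can be illustrated with a minimal sketch. MCP itself exchanges JSON-RPC messages such as `tools/list` and `tools/call`; the class below is not the MCP SDK but a hypothetical in-process stand-in (`ReadOnlySqlServer`, `list_tools`, `call_tool`, and the `orders` table are all assumptions made for illustration). It shows a server that advertises a single query tool and refuses anything other than SELECT.

```python
import sqlite3
from typing import Any


class ReadOnlySqlServer:
    """Hypothetical stand-in for an MCP server that wraps a database."""

    def __init__(self) -> None:
        self._db = sqlite3.connect(":memory:")
        self._db.execute("CREATE TABLE orders (id INTEGER, amount REAL)")
        self._db.executemany(
            "INSERT INTO orders VALUES (?, ?)", [(1, 9.5), (2, 20.0)]
        )
        self._db.commit()
        # After seeding, SQLite itself rejects writes on this connection.
        self._db.execute("PRAGMA query_only = ON")

    def list_tools(self) -> list[dict[str, str]]:
        # Discovery: the client learns at runtime what it is allowed to call.
        return [{"name": "query", "description": "Run a read-only SELECT"}]

    def call_tool(self, name: str, arguments: dict[str, Any]) -> list[tuple]:
        if name != "query":
            raise PermissionError(f"tool not exposed: {name}")
        sql = arguments["sql"].strip()
        # Server-enforced permission: only SELECT statements pass.
        if not sql.lower().startswith("select"):
            raise PermissionError("only SELECT statements are allowed")
        return self._db.execute(sql).fetchall()


server = ReadOnlySqlServer()
tool_names = [tool["name"] for tool in server.list_tools()]
rows = server.call_tool("query", {"sql": "SELECT id, amount FROM orders ORDER BY id"})
```

The prefix check is deliberately simple; the `query_only` pragma acts as a second line of defense at the database level, so the restriction does not rely on string matching alone.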

Security and Governance in AI-Driven ETL

ETL orchestration through AI agents also introduces new risks. MCP addresses these with:

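- Capability Scoping: Explicitly declaring which tools, resources, and prompts are exposed [2][3].

- Authorization Hooks: Integrating with enterprise identity systems for access control; for HTTP-based transports, the specification defines an authorization flow based on OAuth 2.1 [1][2].

- Auditability: Because every request passes through a server, logging and telemetry can trace which agents accessed which resources [2].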

But risks remain—prompt injection and tool abuse are highlighted in the OWASP Top 10 for LLM applications [6]. Research also identifies vulnerabilities like tool poisoning, which must be mitigated through governance and monitoring [7].
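How capability scoping and auditability might fit together on the server side can be sketched in a few lines. This is an illustration under stated assumptions, not part of the MCP specification or any SDK: `ScopedToolRegistry`, the `grants` mapping, the agent id `reporting-agent`, and both example tools are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any, Callable


@dataclass
class ScopedToolRegistry:
    """Hypothetical server-side registry; not part of any MCP SDK."""

    tools: dict[str, Callable[..., Any]]
    grants: dict[str, set[str]]  # agent id -> names of tools it may call
    audit_log: list[dict[str, Any]] = field(default_factory=list)

    def visible_tools(self, agent: str) -> list[str]:
        # Capability scoping: an agent only sees what it was granted.
        return sorted(self.grants.get(agent, set()) & set(self.tools))

    def call(self, agent: str, tool: str, **arguments: Any) -> Any:
        allowed = tool in self.visible_tools(agent)
        # Auditability: record every attempt, including denied ones.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent,
            "tool": tool,
            "allowed": allowed,
        })
        if not allowed:
            raise PermissionError(f"{agent} may not call {tool}")
        return self.tools[tool](**arguments)


registry = ScopedToolRegistry(
    tools={
        "read_orders": lambda limit: [("order", i) for i in range(limit)],
        "drop_table": lambda name: f"dropped {name}",
    },
    grants={"reporting-agent": {"read_orders"}},
)

rows = registry.call("reporting-agent", "read_orders", limit=2)
try:
    registry.call("reporting-agent", "drop_table", name="orders")
    denied = False
except PermissionError:
    denied = True
```

Logging the denied call as well as the successful one matters here: attempts at tool abuse are exactly the events a later review would want to find.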

Real-World Adoption and Experiments

Although MCP was only released in late 2024, experiments are already demonstrating its potential for orchestrating ETL workflows:

- Claude Desktop: Connects to local MCP servers that expose file systems and developer tools, allowing AI agents to automate data wrangling tasks [1].

- Community Servers: Dozens of examples exist for databases, APIs, and analytics engines, showing how MCP servers can wrap ETL-like functionality [4][5].

- Enterprise Interest: Microsoft and others describe MCP as the “USB-C of AI apps,” pointing toward standardized AI integration for data workflows [8].

From “ETL as Code” to “ETL as Protocol”

The critical advantage is control. Instead of scattering permissions and orchestration logic across scripts and APIs, MCP centralizes them:

- Dynamic orchestration: Agents adapt pipelines on the fly, assembling ETL processes from discovered capabilities [3][5].

- Governance by design: Servers enforce what is possible; clients only consume what is exposed [2].

- Compliance at scale: Unified audit trails replace fragmented logs across disparate systems [2][6].

In other words, MCP has the potential to turn ETL from hand-written code into an AI-ready, governable, and flexible protocol-driven process.
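To make "assembling ETL processes from discovered capabilities" concrete, here is a small sketch. Everything in it is assumed for illustration: the three servers (`warehouse`, `geo_api`, `storage`), their tools, and the helper names `discover` and `run_pipeline`. In a real deployment the step list would be chosen by the agent from tool descriptions; a fixed list stands in for that decision here.

```python
from typing import Any, Callable

Tool = Callable[[Any], Any]


def discover(servers: dict[str, dict[str, Tool]]) -> dict[str, Tool]:
    """Flatten per-server tool listings into one catalog keyed 'server.tool'."""
    return {
        f"{server}.{name}": fn
        for server, tools in servers.items()
        for name, fn in tools.items()
    }


def run_pipeline(catalog: dict[str, Tool], steps: list[str], payload: Any = None) -> Any:
    """Feed the output of each discovered capability into the next one."""
    for step in steps:
        if step not in catalog:
            raise LookupError(f"capability not discovered: {step}")
        payload = catalog[step](payload)
    return payload


sink: list[dict[str, Any]] = []  # stands in for cloud storage


def write_rows(rows: list[dict[str, Any]]) -> int:
    sink.extend(rows)
    return len(rows)


# Hypothetical servers, each exposing one capability.
servers: dict[str, dict[str, Tool]] = {
    "warehouse": {"extract_orders": lambda _: [{"id": 1}, {"id": 2}]},
    "geo_api": {"add_region": lambda rows: [{**row, "region": "EU"} for row in rows]},
    "storage": {"write_rows": write_rows},
}

catalog = discover(servers)
written = run_pipeline(
    catalog,
    ["warehouse.extract_orders", "geo_api.add_region", "storage.write_rows"],
)
```

Because the pipeline only references names found in the catalog, a capability that a server stops exposing fails loudly at the lookup instead of silently running stale glue code.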

Outlook: AI Agents as ETL Orchestrators

MCP was only introduced in November 2024, yet adoption momentum is strong [1][8][9]. If current trends continue, MCP could become the default orchestration layer for AI-driven ETL within 3–5 years.

The opportunity is clear: enterprises gain agility and governance by letting AI agents orchestrate ETL pipelines via a standardized protocol, rather than reinventing integrations for every system.

Sources

[1]: https://www.anthropic.com/news/model-context-protocol "Introducing the Model Context Protocol – Anthropic"

[2]: https://modelcontextprotocol.io/specification/2025-06-18 "Specification – Model Context Protocol"

[3]: https://medium.com/%40tahirbalarabe2/model-context-protocol-mcp-vs-apis-the-new-standard-for-ai-integration-d6b9a7665ea7 "MCP vs APIs – Medium"

[4]: https://github.com/modelcontextprotocol/servers "Community Servers – GitHub"

[5]: https://github.blog/ai-and-ml/github-copilot/building-your-first-mcp-server-how-to-extend-ai-tools-with-custom-capabilities/ "Building your first MCP server – GitHub Blog"

[6]: https://owasp.org/www-project-top-10-for-large-language-model-applications/ "OWASP Top 10 for Large Language Model Applications"

[7]: https://arxiv.org/abs/2504.03767 "MCP Security: Risks and Defenses – arXiv"

[8]: https://www.reuters.com/business/microsoft-wants-ai-agents-work-together-remember-things-2025-05-19/ "Microsoft wants AI 'agents' to work together and remember things – Reuters"

[9]: https://www.axios.com/2025/04/17/model-context-protocol-anthropic-open-source "Hot new protocol glues together AI and apps – Axios"